ChatGPT and GPT-4 have been dazzling their users with their linguistic prowess. But, have you ever wondered if (and how) these large language models (LLMs) are also making a splash in the darker corners of the internet? It turns out that threat actors are indeed finding creative ways to exploit the technological marvels of generative AI.

Threat Actors and Generative AI

Let’s see how malicious actors are stirring up trouble with LLMs:

ChatGPT Isn’t Your Trustworthy Chemistry Partner

Picture this: a daring dark web forum user attempts to harness ChatGPT’s knowledge for crafting illicit drugs:

Then, another forum user replies with their input:

At Flare, we generally advise against taking pharmaceutical advice of any kind from LLMs!

Even the Dark Web Can’t Resist a Good Marketing Scheme

Scams promising “passive income” plague social media platforms, and the dark web isn’t immune to them either. It has its fair share of unsavory advertisements, including scams that pledge to reveal the secrets of unimaginable wealth for a mere $99 in Bitcoin. Not even the most secretive corners of the internet can escape the lure of a convincing sales pitch.

On a More Serious Note…

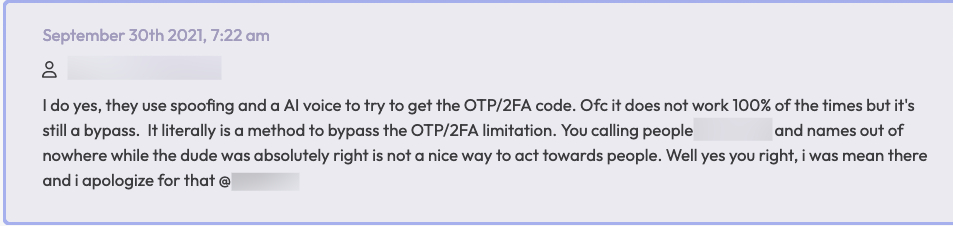

Malicious actors appear to be devising strategies to exploit AI for voice spoofing scams. As we unfortunately anticipated, cybercriminals are harnessing text-to-speech AI algorithms to create convincing human-like voices, sometimes even imitating specific individuals. These realistic voices are then used to extract sensitive information, such as 2FA codes, from unsuspecting victims.

As AI algorithms become more accessible and widespread, we can only expect this trend to persist and escalate. This highlights the importance of remaining vigilant and raising awareness about the potential misuse of AI technology by threat actors of varying sophistication.

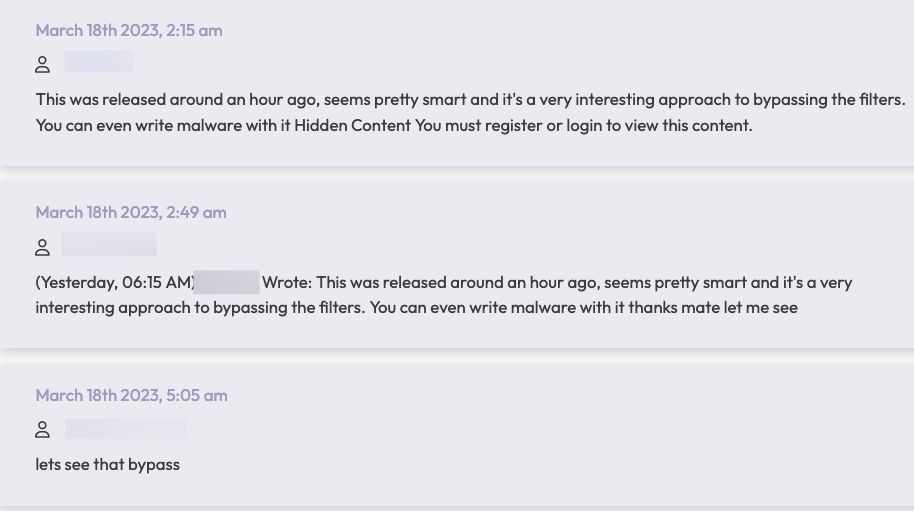

New Ways Threat Actors Bypass Filters

As some ChatGPT and GPT-4 users know, these models sometimes respond that they are “only an AI language model trained by OpenAI,” even to harmless requests. Threat actors seem to be sharing and selling new “jailbreaks” that enable others to use ChatGPT to write malware and perform other malicious tasks.

Cybercrime and Generative AI: What Does this Mean?

Comical attempts at drug synthesis aside, the misuse of LLMs like ChatGPT and GPT-4 for cybercrime is no laughing matter. The dark web is abuzz with creative ways to exploit these powerful tools.

As these AI algorithms continue to evolve and gain prominence, it is crucial for both organizations and individuals to recognize the potential dangers they pose. Cybersecurity professionals must stay vigilant and constantly adapt their strategies to stay ahead of the ever-changing threat landscape.

Meanwhile, individuals should exercise caution when encountering suspicious communications, even if they sound authentic. When in doubt, double-checking the source and avoiding sharing sensitive information can create obstacles for a threat actor’s cybercrime attempts.

Generative AI and Flare

As the examples above show, malicious actors are already imagining and trying out cybercrime strategies involving generative AI. However, LLM tools are also a testament to human ingenuity and the immense positive potential of AI. It’s our collective responsibility to ensure that these capabilities are used for our collective benefit, and not to the detriment of the digital landscape.

Our approach at Flare is to embrace generative AI and its possibilities, and to evolve along with it to give cyber teams the advantage. Incorporated into cyber threat intelligence, LLMs can become an essential capability for assessing threats more rapidly and accurately.

Take a look at the AI Powered Assistant’s capabilities for yourself!